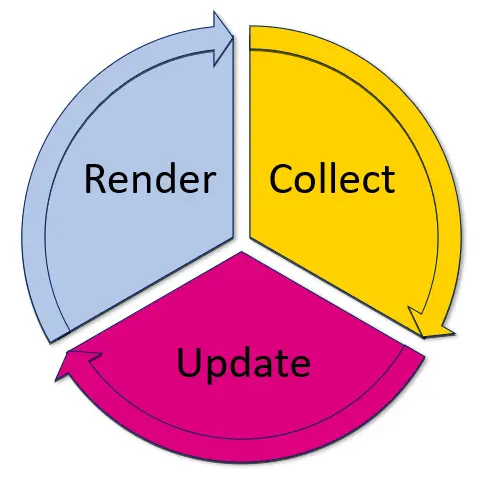

Main Loop

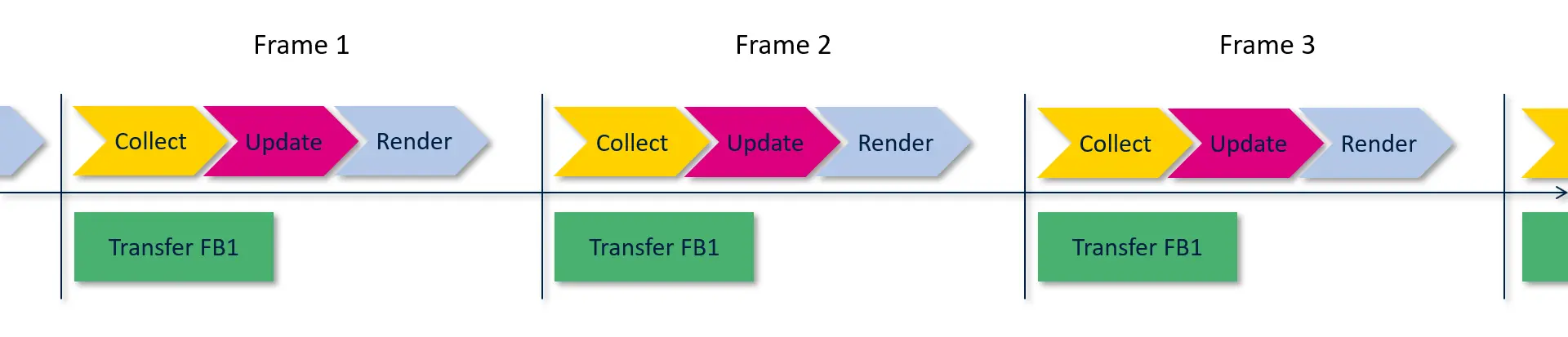

In this section you will learn more about the workings of the graphics engine in TouchGFX, and in particular about the main loop. Recall that the main task of the graphics engine is to render the graphics (the UI model) of your application into the framebuffer. This process happens over and over again to produce new frames on the display.

The graphics engine collects external events such as display touches or button presses. These events are filtered and forwarded to the application. The application can use these events to update the UI model, for example by changing a button to its pressed state when the user touches the screen over the button, and later changing the button back to the released state when the user no longer touches the screen.

Finally the graphics engine renders the updated model to the framebuffer. This process loops forever.

After rendering a frame, the framebuffer is transferred to the display, where the user can see the graphics. The transfer must be synchronized with the display to avoid visible glitches. For some displays the transfers must happen regularly with a minimum time interval. For other displays the transfer must happen when a signal is sent from the display.

The graphics engine implements this synchronization by waiting for a "go" signal from the hardware abstraction layer. See the documentation on the hardware abstraction layer for details.

In pseudo code the main loop inside the TouchGFX graphics engine looks like this:

```cpp
while (true) {
    collect(); // Collect events from outside
    update();  // Update the application UI model
    render();  // Render new updated graphics to the framebuffer
    wait();    // Wait for 'go' from display
}
```

The code is more involved in the real implementation, but the pseudo code above helps in understanding the main parts of the engine.

We will discuss these four stages in more detail below.

Collect

In this phase the graphics engine collects events from the outside environment. These events are typically touch events and button presses.

TouchGFX samples and propagates events to the application. The raw touch events are converted into more specific touch events:

- Click: The user pressed a finger on the display or released it from the display.

- Drag: The user moved a finger on the display (while touching the display).

- Gestures: The user moved a finger fast in one direction and then released it. This is called a swipe and is recognized by the graphics engine.

The events are forwarded to the UI elements (e.g. widgets) that are currently active.

The engine also forwards a tick event. This event represents the new frame (or the step in time) and is always sent, even if there was no other external input. Applications use this event to drive animations or other time-based actions, like changing to a pause screen after a specific time has elapsed.
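The conversion from raw touch samples to specific events can be sketched as follows. This is a minimal illustration, not the engine's implementation: the types `RawTouch` and `Event` and the function `collectEvents` are hypothetical, gesture recognition is left out, and placing the tick event last is an assumption.

```cpp
#include <cassert>
#include <vector>

// Hypothetical types for illustration; these are not the TouchGFX classes.
struct RawTouch { bool touching; int x; int y; }; // One sample per frame

enum class EventType { ClickPressed, ClickReleased, Drag, Tick };
struct Event { EventType type; int x; int y; };

// Converts the raw sample of the current frame into specific events,
// given the sample from the previous frame.
std::vector<Event> collectEvents(const RawTouch& previous, const RawTouch& current) {
    std::vector<Event> events;
    if (current.touching && !previous.touching) {
        events.push_back({EventType::ClickPressed, current.x, current.y});
    } else if (!current.touching && previous.touching) {
        // Report the release at the last known touch position
        events.push_back({EventType::ClickReleased, previous.x, previous.y});
    } else if (current.touching && (current.x != previous.x || current.y != previous.y)) {
        events.push_back({EventType::Drag, current.x, current.y});
    }
    // The tick event is sent in every frame, even without other input
    events.push_back({EventType::Tick, 0, 0});
    return events;
}
```

Calling `collectEvents` with two untouched samples yields only the tick event, which matches the rule that a tick is sent even when there is no other input.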

Update

Here the graphics engine works together with the application to update the UI to reflect the collected events. The graphics engine knows which Screen is currently active and passes events to this object.

The basic principle is that the engine informs the application (i.e. the Screen and Widget objects in the UI model) about the events. In response, the application requests specific parts of the display to be redrawn. The application does not draw directly in response to the events; it changes the properties of Widgets and requests redraws.

If, for example, a Click event occurs, the graphics engine searches the scene model of the Screen object to find the Widget that should receive the event. Some Widgets, like Image and TextArea, do not wish to receive Click events. Their event handlers are also empty, so nothing happens.

Other Widgets, like Button, react to a Click event (pressed or released). The Button Widget changes its state to show another image when pressed, and changes the state back when the touch is released.

When a Widget like the Button changes its state, it must also be redrawn in the framebuffer. The Widget is responsible for communicating this back to the graphics engine in response to the event. The graphics engine does not itself redraw any Widgets based on the collected events. The Widgets keep track of their own internal state (for a Button, what image to draw), and instruct the graphics engine to redraw the part (a rectangle) of the display that is covered by the Widget.
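The division of work between Widget and engine can be illustrated with a small model. This is a sketch under stated assumptions: `Engine`, `SimpleButton`, `Rect` and `ClickEvent` are hypothetical stand-ins defined here, not the TouchGFX classes of the same or similar names.

```cpp
#include <cassert>
#include <vector>

// Hypothetical minimal model; the names mirror the text, not the TouchGFX headers.
struct Rect { int x, y, width, height; };

struct Engine {
    std::vector<Rect> invalidatedAreas; // Redraw requests for this frame
    void invalidate(const Rect& area) { invalidatedAreas.push_back(area); }
};

struct ClickEvent { bool pressed; };

class SimpleButton {
public:
    SimpleButton(Engine& owner, Rect bounds) : engine(owner), area(bounds), pressed(false) {}

    // Update phase: change state only, then ask the engine for a redraw
    void handleClickEvent(const ClickEvent& evt) {
        if (evt.pressed == pressed) {
            return; // No state change, so nothing to redraw
        }
        pressed = evt.pressed;
        engine.invalidate(area);
    }

    bool isPressed() const { return pressed; }

private:
    Engine& engine;
    Rect area;
    bool pressed;
};
```

Note that `handleClickEvent` never draws anything. It only records the new state and registers the covered rectangle, leaving the actual drawing to the render phase.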

The application itself can also react to the events. Two ways are common:

- Configure an interaction for a Widget in TouchGFX Designer. For example, we can configure an interaction to make another Widget visible when the Button is pressed. This interaction is executed after the Button has changed its state and requested a redraw of itself from the graphics engine. If you use the interaction to show another (invisible) Widget, the application should also request a redraw from the graphics engine.

- React to events on the Screen. It is also possible to react to events in the Screen itself. The event handlers are virtual functions on the Screen class. These functions can be reimplemented in the Screens in the application. This can, for example, be used to perform an action whenever the user touches the screen, regardless of whether the touch is on a Widget.

The Screen class has the following event handlers. These are called by the graphics engine when the corresponding external event has been collected:

framework/include/touchgfx/Screen.hpp

```cpp
virtual void handleClickEvent(const ClickEvent& evt);
virtual void handleDragEvent(const DragEvent& evt);
virtual void handleGestureEvent(const GestureEvent& evt);
virtual void handleTickEvent();
virtual void handleKeyEvent(uint8_t key);
```

Any C++ code can be inserted in these event handlers. Typically applications update the state of some Widgets and/or call some application specific functions (business logic).
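As an illustration of reimplementing one of these handlers, the sketch below counts every press on the screen. To keep it compilable without the framework, it declares its own reduced `Screen` and `ClickEvent` stand-ins; their members are assumptions made for this example, and `CountingScreen` is a hypothetical application class.

```cpp
#include <cassert>
#include <cstdint>

// Stand-ins so the sketch compiles without the framework. In an application
// these come from the TouchGFX headers; the members shown here are assumptions.
struct ClickEvent { bool pressed; };

class Screen {
public:
    virtual ~Screen() {}
    virtual void handleClickEvent(const ClickEvent&) {}
    virtual void handleTickEvent() {}
};

// Hypothetical application screen that counts every press, no matter
// which Widget (if any) is under the finger.
class CountingScreen : public Screen {
public:
    CountingScreen() : pressCount(0) {}

    virtual void handleClickEvent(const ClickEvent& evt) {
        if (evt.pressed) {
            ++pressCount;
        }
        // Call the base class so that normal event handling still takes place
        // (the stand-in base class above does nothing).
        Screen::handleClickEvent(evt);
    }

    std::uint32_t getPressCount() const { return pressCount; }

private:
    std::uint32_t pressCount;
};
```

Calling the base class handler at the end is a design choice worth keeping in real code, since otherwise the Widgets on the Screen would no longer receive the event.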

Time based updates

The handleTickEvent event handler is called in every frame. This allows the application to perform time based updates of the user interface. An example could be to fade a Widget away after 10 seconds. Assuming that we have 60 frames in a second, the code could look like:

```cpp
void handleTickEvent() {
    tickCounter += 1;
    if (tickCounter == 600) {
        myWidget.startFadeAnimation(0, 20); // Fade to 0 = invisible in 20 frames
    }
}
```

The graphics engine also calls an event handler on the Model class. This event handler is typically used to perform repeated actions like checking message queues or sampling GPIO:

```cpp
void Model::tick() {
    bool b = sampleGPIO_Input1(); // Sample polled IO
    if (b) {
        ...
    }
}
```

Requesting a redraw

As discussed above in the Button example, the Widgets are responsible for requesting a redraw when their state changes. The mechanism behind this is called an invalidated area.

When the Button changes state (e.g. from released to pressed) and needs a redraw, the area covered by the Button Widget is an invalidated area. The graphics engine keeps a list of these invalidated areas requested for the frame. All the collected events (touch, button, tick) may result in one or more invalidated areas, so there can be many invalidated areas in every frame.

The event handlers on the Screen class can also request a redraw of an area. Here we change the color of a Box widget, box1, in frame 10 and request a redraw by calling the invalidate method on the Box:

```cpp
void handleTickEvent() {
    tickCounter += 1;
    if (tickCounter == 10) {
        box1.setColor(Color::getColorFrom24BitRGB(0xFF, 0x00, 0x00)); // Set color to red
        box1.invalidate(); // Request redraw
    }
}
```

In this example the graphics engine will call the handleTickEvent handler in every frame. In the 10th frame, the application code requests a redraw of the area covered by box1. As a response to this the graphics engine will redraw that area in the framebuffer using the color saved in the box1 widget.

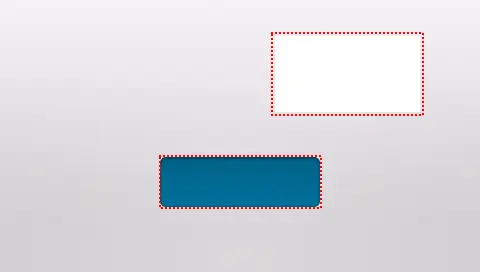

In the user interface below we have a Button Widget and a Box Widget on top of a background image. If we add an interaction on the Button that changes the color of the Box when the Button is clicked, we get two invalidated areas (indicated in red) when the user clicks the Button:

The area of the Box is invalidated to get the new color drawn in the framebuffer. The Button also invalidates itself to get the released state drawn again.

Render

As we just discussed, the result of the update phase is a list of areas to redraw, the invalidated areas. The task for the render phase is basically to run through this list and draw the Widgets covering these areas into the framebuffer.

This phase is handled automatically by the graphics engine. The application has defined the scene model (the Widgets in the UI) and invalidated some areas. The rest is handled by the engine.

The graphics engine handles the invalidated areas one by one. For each area the engine scans the scene model and collects a list of the Widgets that are covered by the area (partly or in whole).

Given this list of Widgets, the graphics engine calls the draw method on each Widget, starting with the Widget in the background and ending with the foremost Widget.

The Widgets' draw methods use the state of the Widget, e.g. the color, when drawing to the framebuffer. Any information that is needed to draw the Widget must be saved to the Widget during the update phase. Otherwise this information is not available in the rendering phase.
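The render phase described above can be sketched as two nested loops. This is a simplified model, not the engine's code: `Rect`, `Widget` and `renderFrame` are hypothetical, the scene is a flat list ordered from background to foreground, and a recorded name stands in for the actual draw call.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical scene model; not the TouchGFX classes.
struct Rect {
    int x, y, width, height;
    bool intersects(const Rect& o) const {
        return x < o.x + o.width && o.x < x + width &&
               y < o.y + o.height && o.y < y + height;
    }
};

struct Widget {
    std::string name;
    Rect area;
};

// Returns the names of the Widgets drawn for the invalidated areas.
// 'scene' is ordered from background to foreground, so iterating
// forward draws the backmost Widget first.
std::vector<std::string> renderFrame(const std::vector<Widget>& scene,
                                     const std::vector<Rect>& invalidatedAreas) {
    std::vector<std::string> drawCalls;
    for (const Rect& area : invalidatedAreas) {   // Areas are handled one by one
        for (const Widget& widget : scene) {      // Back to front
            if (widget.area.intersects(area)) {
                drawCalls.push_back(widget.name); // Stands in for drawing the Widget
            }
        }
    }
    return drawCalls;
}
```

With a background, a Box and a Button in the scene, invalidating the Box and the Button leads to four draw calls: the background and the Box for the first area, then the background and the Button for the second.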

Wait

The TouchGFX graphics engine waits for a signal before updating and rendering the next frame. There are two reasons for waiting between frames instead of just continuously rendering frames as fast as possible:

- The rendering is synchronized with the display. As mentioned above, some displays require that the framebuffer is transmitted repeatedly. While this transmission is ongoing, it is not advisable to render arbitrarily to the framebuffer. The graphics engine therefore waits for a short time after the transmission has started before starting the rendering. Other displays send a signal to the microcontroller when the framebuffer should be transmitted. The graphics engine waits for that signal.

- Frames are rendered at a fixed rate. It is often beneficial for the application that frames are rendered at a fixed rate, as this makes it easier to create animations that last a specific time. For example, if you have a 60 Hz display, a two-second animation should be programmed to complete in 120 frames.
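The relation between animation duration and frame count is simple arithmetic. The helper below is a hypothetical convenience function, not part of TouchGFX, and assumes that the display refresh rate is known and constant.

```cpp
#include <cassert>
#include <cstdint>

// Number of ticks (frames) an animation must span to last 'durationMs'
// on a display refreshing at 'refreshHz'. Rounds to the nearest frame.
std::uint32_t framesForDuration(std::uint32_t refreshHz, std::uint32_t durationMs) {
    assert(refreshHz > 0);
    // Use a 64-bit intermediate to avoid overflow in the multiplication
    std::uint64_t scaled = static_cast<std::uint64_t>(refreshHz) * durationMs;
    return static_cast<std::uint32_t>((scaled + 500) / 1000);
}
```

For a 60 Hz display, `framesForDuration(60, 2000)` gives the 120 frames mentioned above.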

The time during which the graphics engine is waiting is typically used by other, lower-priority processes in the application. In these cases the time is not wasted, as the lower-priority processes should run at some point anyway.

Handling the framebuffers

Recall from the discussion previously that the graphics engine synchronizes with the display before the framebuffer is updated. After the rendering to the framebuffer the engine also needs to make sure that the display shows the updated framebuffer.

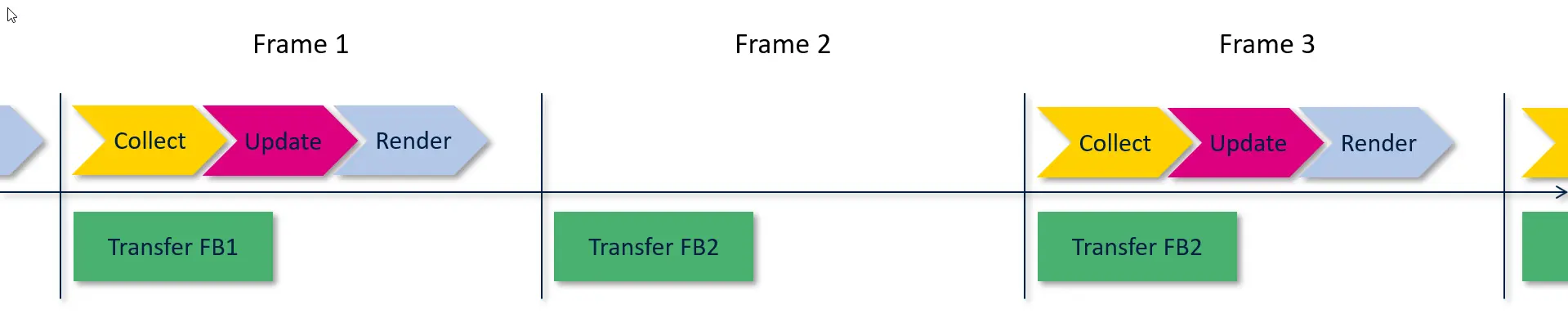

Two framebuffers

In the simplest setup we have two framebuffers available. The graphics engine alternates between the two framebuffers. While drawing a frame into a framebuffer, the other framebuffer is transferred to (and shown on) the display.

In this drawing we assume a parallel RGB display connected to the LTDC controller. This means that a framebuffer must be transmitted to the display in every frame. As we have two framebuffers the graphics engine can draw into one framebuffer while the other framebuffer is being transmitted. This scheme works very well and is preferred if possible.

As the graphics engine is drawing in every frame we also transmit a new framebuffer in all frames in the above drawing.

There will often be frames where the application is not updating anything. This implies that nothing is rendered. The same framebuffer is therefore transmitted again in the succeeding frame.

The application is not drawing anything in frame 2, so the graphics engine retransmits framebuffer 2 again in frame 3.

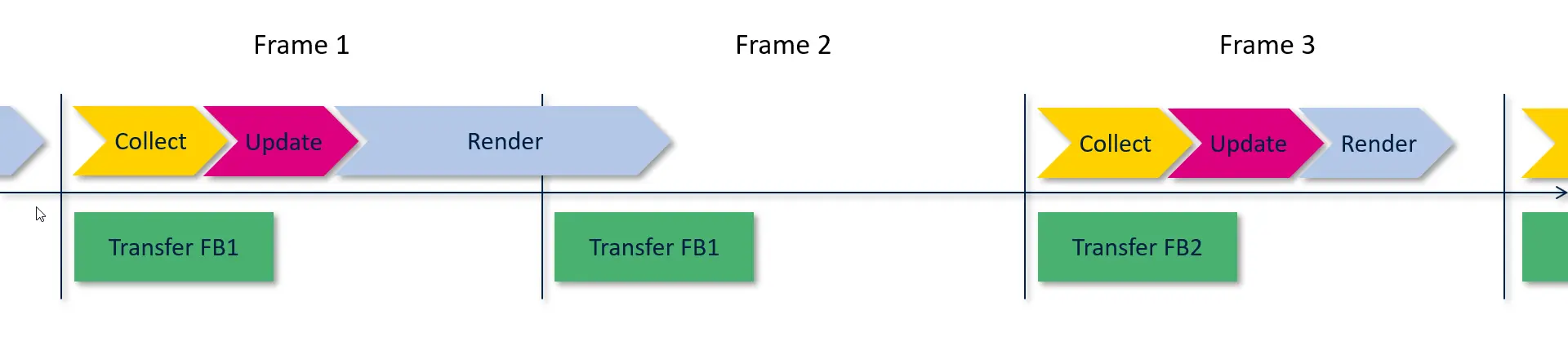

The typical parallel RGB display has a refresh rate of around 60 Hz. This update frequency must be maintained by the microcontroller. It means that we have about 16 ms to render a new frame before the transmission begins again. In some cases the time to render a new frame is more than 16 ms. In this case the graphics engine just retransmits the same frame again (as before):

The rendering of frame 1 takes more than 16 ms, so frame 0, previously rendered to framebuffer 1, is retransmitted. The new frame in framebuffer 2 is transmitted in frame 3. When two framebuffers are available, the rendering time can be very long. The previous frame is retransmitted until the new frame is available.
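The behaviour with two framebuffers can be simulated in a few lines. This is a sketch under simplifying assumptions, not TouchGFX code: `simulateDoubleBuffering` is a hypothetical function, buffers are numbered 0 and 1, render times are given in whole refresh periods, and a render time of 0 models a frame in which the application updates nothing.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// renderTimes[i] is the number of refresh periods the i-th update needs
// to render (0 = nothing to render in that period).
// Returns, for each display frame, the index (0 or 1) of the framebuffer
// that is transmitted to the display.
std::vector<int> simulateDoubleBuffering(const std::vector<int>& renderTimes, int displayFrames) {
    assert(displayFrames >= 0);
    std::vector<int> transmitted;
    int front = 0;       // Buffer currently shown on the display
    std::size_t job = 0; // Index of the update being rendered
    int elapsed = 0;     // Refresh periods already spent on the current update

    for (int frame = 0; frame < displayFrames; ++frame) {
        transmitted.push_back(front); // The front buffer is sent in every period
        if (job >= renderTimes.size()) {
            continue; // Nothing left to render: keep retransmitting
        }
        ++elapsed;
        if (elapsed >= renderTimes[job]) {
            if (renderTimes[job] > 0) {
                front = 1 - front; // A new frame is complete: swap buffers
            }
            ++job;
            elapsed = 0;
        }
    }
    return transmitted;
}
```

For render times `{1, 2, 1}` over five display frames the transmitted buffers are 0, 1, 1, 0, 1: the second update takes two periods, so the previous buffer is sent twice before the swap.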

One framebuffer

In some systems there is only memory for one framebuffer. If we have a parallel RGB display we are thus forced to transmit framebuffer 1 in every frame.

This can be problematic because the graphics engine is forced to draw into the same framebuffer that we are also transmitting to the display at the same time. If this is done without care there is a high risk that the display shows a frame that is a mix of the previous frame and the new.

One solution is to hold back the drawing until the transfer is complete and only draw in the time slot before the transfer starts again. This yields little time to draw, as the transfer takes up a significant part of the overall frame time. Another drawback is that incomplete frames (tearing) might still occur if the drawing is not complete when the next transfer starts.

A more promising solution is to keep track of how much of the framebuffer has already been transmitted and then limit the rendering to the appropriate part of the framebuffer. As the transfer progresses, more and more of the framebuffer becomes available to the rendering algorithms.
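The idea of limiting the rendering to the already transmitted part can be sketched as a clipping step. This is an illustration only: `LineRange` and `clipToTransmitted` are hypothetical, and the sketch assumes that the transfer proceeds line by line from the top of the framebuffer.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper, not a TouchGFX function.
struct LineRange { int firstLine; int lineCount; }; // Horizontal band of the framebuffer

// Limits a requested band to the lines the transfer has already passed.
// 'linesTransmitted' counts the lines sent in the ongoing transfer, starting
// from line 0 at the top. The returned band may be empty (lineCount == 0).
LineRange clipToTransmitted(const LineRange& requested, int linesTransmitted) {
    assert(requested.lineCount >= 0 && linesTransmitted >= 0);
    int end = std::min(requested.firstLine + requested.lineCount, linesTransmitted);
    int count = std::max(0, end - requested.firstLine);
    return LineRange{requested.firstLine, count};
}
```

A band of 50 lines starting at line 100 is reduced to 20 lines when 120 lines have been transmitted, and to nothing when only 80 lines have been transmitted. The remaining lines have to wait until the transfer has moved past them.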

The graphics engine contains algorithms that help the programmer ensure that the drawing is performed correctly.

The application updates and renders the framebuffer in every frame:

The framebuffer is retransmitted unchanged if nothing is updated in a frame.

If the rendering time is longer than 16 ms the rendering has not finished when the retransmission starts again:

In this situation the graphics engine must make sure that the parts that are being transmitted are rendered completely. Otherwise the display will show the unfinished framebuffer.

In the next section we will discuss the rendering time for the individual Widgets. This will help the programmer to write high-performance applications.