Graphics Engine

The TouchGFX graphics engine's main responsibility is drawing graphics on the display of an embedded device.

This section gives an overview of what kind of graphics engine TouchGFX is and provides some background on why it is designed this way.

Scene model

Graphics engines can be divided into two main categories.

- Immediate mode graphics engines provide an API that enables an application to directly draw things to the display. It is the responsibility of the application to ensure that the correct drawing operations are invoked at the right time.

- Retained mode graphics engines let the user manipulate an abstract model of the components being displayed. The engine takes care of translating this component model into the correct graphics drawing operations at the right times.

TouchGFX follows the retained mode graphics principles. In short, this means that TouchGFX provides a model that the user manipulates, and TouchGFX then translates this model into an optimized set of rendering method calls.
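To make the retained mode idea concrete, here is a minimal sketch in plain C++. It is not TouchGFX code: `Box`, `Scene`, and `render` are hypothetical names chosen for illustration, and the "display" is simply a list of recorded draw calls. The point is that the application only edits the model, while the scene decides which drawing operations result from it.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical component: the application only edits these fields.
struct Box {
    int x = 0;
    int y = 0;
    bool visible = true;
};

// Hypothetical retained-mode scene: it owns the model and decides what to draw.
class Scene {
public:
    // Add a component to the model and return its index.
    std::size_t add(const Box& box) {
        boxes.push_back(box);
        return boxes.size() - 1;
    }

    // Give the application access to a component so it can change it.
    Box& at(std::size_t index) { return boxes[index]; }

    // Translate the current model into draw calls (recorded as strings here).
    std::vector<std::string> render() const {
        std::vector<std::string> calls;
        for (const Box& b : boxes) {
            if (!b.visible) {
                continue; // hidden components produce no draw call
            }
            calls.push_back("drawBox(" + std::to_string(b.x) + "," +
                            std::to_string(b.y) + ")");
        }
        return calls;
    }

private:
    std::vector<Box> boxes;
};
```

In an immediate mode design, the application itself would have to issue each `drawBox` call at the right time. Here it only changes `x`, `y`, or `visible`, and the next call to `render` reflects the change.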

The benefits of TouchGFX being a retained mode engine are many. The primary ones are:

- Ease of use: A retained graphics engine is easy to use. The user configures the components on screen by invoking methods on the internal model and does not have to think in terms of actual drawing operations.

- Performance: TouchGFX analyses the scene model and optimizes the drawing calls needed to realize the model on screen. This includes deliberately not drawing hidden components, drawing and transferring only changed parts of components, managing framebuffers, and much more.

- State management: TouchGFX keeps track of which part of the scene model is active. This in turn makes it easier for the user to optimize the scene model contents.

The main drawback of TouchGFX adhering to the retained mode graphics scheme is:

- Memory consumption: Representing the scene model takes up some memory. TouchGFX reaches its performance levels, typically rendering 60 frames per second, by analyzing the scene model and optimizing the rendering accordingly. Great effort has gone into reducing the amount of memory used by the scene model. In typical applications, the memory used by this model is well below one kilobyte.

Manipulate the model

The scene model consists of components.

Each of the components in the model has exactly one associated parent component. The parent component itself is also part of the scene model. Such a model is widely referred to as a tree.

We will often refer to a component in this tree as a UI component or a widget.
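The tree structure can be sketched in a few lines of standalone C++. This is an illustration only: `Node`, `Point`, and `absolutePosition` are hypothetical names, not TouchGFX classes, and the sketch assumes that each component stores its position relative to its parent and that the root is the one node without a parent.

```cpp
#include <vector>

// Hypothetical scene-model node; positions are assumed relative to the parent.
struct Node {
    int x = 0;
    int y = 0;
    Node* parent = nullptr;        // exactly one parent (nullptr only for the root)
    std::vector<Node*> children;

    // Attach a child and record this node as its single parent.
    void add(Node& child) {
        child.parent = this;
        children.push_back(&child);
    }
};

// Hypothetical pair of absolute display coordinates.
struct Point {
    int x;
    int y;
};

// Walk up the parent chain to turn relative coordinates into absolute ones.
inline Point absolutePosition(const Node& node) {
    Point p{node.x, node.y};
    for (const Node* n = node.parent; n != nullptr; n = n->parent) {
        p.x += n->x;
        p.y += n->y;
    }
    return p;
}
```

Under these assumptions, moving a parent node moves all of its descendants on screen without touching their own coordinates, which is one reason a tree is a convenient shape for a scene model.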

From the application's point of view, the displayed graphics are updated by setting up and manipulating the widgets in the scene model. The following example sets the position and images of a button and adds it to the scene model:

myButton.setXY(100,50);

myButton.setBitmaps(Bitmap(BITMAP_BUTTON_RELEASED_ID), Bitmap(BITMAP_BUTTON_PRESSED_ID));

add(myButton);

Issue drawing commands

Ultimately, when rendering the scene model, TouchGFX will utilize its drawing API. This drawing API has methods for drawing graphics primitives, such as boxes, images, texts, lines, polygons, textured triangles, etc.

As an example, the Button widget in TouchGFX, when rendered, uses the drawPartialBitmap method for drawing images. The source code for the drawing of the button widget in TouchGFX looks close to:

touchgfx/widgets/button.cpp

void Button::draw(const Rect& invalidatedArea) const

{

// calculate the part of the bitmap to draw

api.drawPartialBitmap(bitmap, x, y, part, alpha);

}

Inspect the actual source in touchgfx/widgets/button.cpp for details.
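The step "calculate the part of the bitmap to draw" is, in essence, a rectangle intersection: only the portion of the widget that overlaps the invalidated area needs to be redrawn. The following standalone sketch shows one way to compute such an overlap. `Area` and `intersect` are hypothetical names for this illustration and do not reproduce the TouchGFX implementation.

```cpp
#include <algorithm>

// Hypothetical rectangle type for this sketch.
struct Area {
    int x;
    int y;
    int width;
    int height;
};

// Return the overlap of two rectangles; an all-zero Area means no overlap.
inline Area intersect(const Area& a, const Area& b) {
    const int left   = std::max(a.x, b.x);
    const int top    = std::max(a.y, b.y);
    const int right  = std::min(a.x + a.width,  b.x + b.width);
    const int bottom = std::min(a.y + a.height, b.y + b.height);
    if (right <= left || bottom <= top) {
        return Area{0, 0, 0, 0};
    }
    return Area{left, top, right - left, bottom - top};
}
```

For example, a button at (100, 50) with size 80 x 40 and an invalidated area at (150, 60) with size 100 x 100 overlap in a 30 x 30 region starting at (150, 60), so only that region would need to be drawn.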

TouchGFX includes optimized implementations of the drawing API. The drawPartialBitmap method, for instance, uses the underlying hardware (Chrom-ART, if available) to draw the bitmap.

You can use these drawing API methods when extending the scene model with new types of widgets. See the section on creating your own custom widget.

The implementation of the drawing API methods is platform specific and highly optimized for each specific MCU.

Main Loop

The workings of many game engines and graphics engines, and of TouchGFX in particular, can be thought of as an infinite loop.

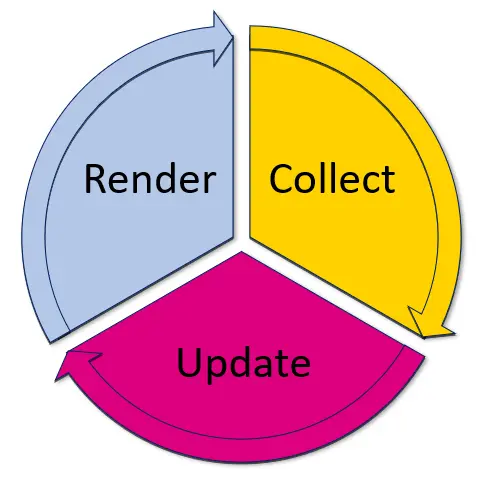

Within the main loop of TouchGFX there are three main activities:

- Collect events: Collect events from the touch screen, presses of physical buttons, messages from backend system, ...

- Update scene model: React to the collected events, updating the positions, animations, colors, images, ... of the model

- Render scene model: Redraw the parts of the model that has been updated and make them appear on the display

A diagrammatic version of the main loop is:

Each execution of the main loop is denoted a tick of the application.
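The three activities and the notion of a tick can be sketched as a simple loop. This is a standalone illustration, not the TouchGFX main loop: `Event`, `Model`, and `runMainLoop` are hypothetical names, the loop runs for a fixed number of ticks so that it terminates, and rendering is reduced to counting frames.

```cpp
#include <functional>
#include <vector>

// Hypothetical input event; a real system would carry touch coordinates, key codes, etc.
struct Event {
    int dx;
};

// Hypothetical model with a single animated property.
struct Model {
    int x = 0;
    bool dirty = false;
};

// Run `ticks` iterations of collect -> update -> render.
// Returns how many ticks actually rendered something.
inline int runMainLoop(Model& model, int ticks,
                       const std::function<std::vector<Event>(int)>& collectEvents) {
    int rendered = 0;
    for (int tick = 0; tick < ticks; ++tick) { // an embedded target would loop forever
        // 1. Collect events
        const std::vector<Event> events = collectEvents(tick);

        // 2. Update scene model
        for (const Event& e : events) {
            model.x += e.dx;
            model.dirty = true;
        }

        // 3. Render scene model, but only when something changed
        if (model.dirty) {
            ++rendered;          // stand-in for drawing and transferring to the display
            model.dirty = false;
        }
    }
    return rendered;
}
```

Skipping the render step on ticks where nothing changed mirrors the idea of redrawing only the updated parts of the model.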

Platform adaptability

Because TouchGFX is designed to run on all STM32 embedded setups, the above activities can be tailored to the platform.

- The collection of events is handled by a dedicated abstraction layer. The tailoring of this layer is the subject of the section on TouchGFX AL Development.

- The updating of the model is completely up to the application. The details on how to do this update are the subject of UI Development.

- The rendering of graphics to the framebuffer is handled by TouchGFX and will in general not need to be customized. The transfer of the pixels in the framebuffer to the display is platform specific; see Board Bring Up and TouchGFX AL Development for how to customize this for specific platforms.
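One common way to make such activities tailorable is to hide the platform-specific parts behind small interfaces. The sketch below illustrates that general pattern only. All names in it (`TouchSource`, `DisplayTransfer`, `FixedTouch`, `CountingDisplay`, `tickOnce`) are hypothetical and are not the TouchGFX abstraction layer API; consult the TouchGFX AL Development section for the real interfaces.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical platform interface: how touch input is sampled.
class TouchSource {
public:
    virtual ~TouchSource() = default;
    // Returns true and fills x/y when the screen is currently pressed.
    virtual bool sample(std::int32_t& x, std::int32_t& y) = 0;
};

// Hypothetical platform interface: how finished pixels reach the display.
class DisplayTransfer {
public:
    virtual ~DisplayTransfer() = default;
    virtual void flush(const std::vector<std::uint16_t>& framebuffer) = 0;
};

// Board-specific stand-ins, used here for illustration only.
class FixedTouch : public TouchSource {
public:
    bool sample(std::int32_t& x, std::int32_t& y) override {
        x = 120;
        y = 80;
        return true;
    }
};

class CountingDisplay : public DisplayTransfer {
public:
    int flushes = 0;
    void flush(const std::vector<std::uint16_t>&) override { ++flushes; }
};

// Platform-independent code depends only on the two interfaces.
inline bool tickOnce(TouchSource& touch, DisplayTransfer& display,
                     std::vector<std::uint16_t>& framebuffer) {
    std::int32_t x = 0;
    std::int32_t y = 0;
    const bool pressed = touch.sample(x, y);
    if (pressed && !framebuffer.empty()) {
        framebuffer[0] = 0xFFFF; // stand-in for rendering a change
    }
    display.flush(framebuffer);
    return pressed;
}
```

With this split, porting to a new board means writing new implementations of the two interfaces, while `tickOnce` and everything above it stays unchanged.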

Read on to get more specifics on the main loop of TouchGFX.