Abstraction Layer Architecture

As described in the previous section, the TouchGFX AL has a particular set of responsibilities. Each responsibility is implemented either in the hardware part of the AL (HAL) or in the part of the AL that synchronizes with the TouchGFX Engine, typically through an RTOS (OSAL). The following table summarizes these responsibilities:

| Responsibility | Operating system or Hardware |
|---|---|
| Synchronize TouchGFX Engine main loop with display transfer | Operating system and hardware |
| Report touch and physical button events | Hardware |
| Synchronize framebuffer access | Operating system |
| Report the next available framebuffer area | Hardware |
| Perform render operations | Hardware |
| Handle framebuffer transfer to display | Hardware |

Each of the following subsections highlights what should be done to fulfill the above responsibilities. For custom hardware platforms, the TouchGFX Generator, inside STM32CubeMX, can generate most of the AL and the accompanying TouchGFX project. The remaining parts, which the AL developer must implement manually, are pointed out through code comments and notifications in the TouchGFX Generator. Read more about the TouchGFX Generator in the next section.

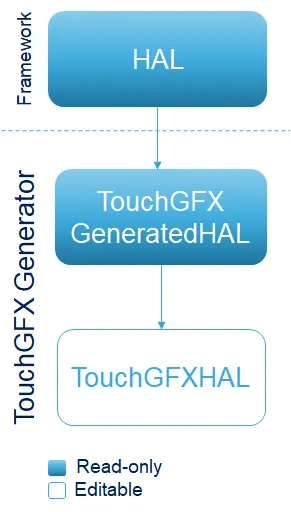

Abstraction Layer Classes

The HAL is accessed by the TouchGFX Engine through concrete sub-classes of HAL. These sub-classes are generated by the TouchGFX Generator. The generator, which is the primary tool for creating the Abstraction Layer, can generate both the part of the HAL that reflects configurations from STM32CubeMX and the OSAL for CMSIS V1 and V2. Please read the section on TouchGFX Generator for further details. Generally, the architecture of the HAL is shown in the following figure.

Synchronize TouchGFX Engine main loop with display transfer

The main idea behind this step is to block the TouchGFX Engine main loop when rendering is done, ensuring that no further frames are produced. Once the display is ready the OSAL signals the blocked Engine main loop to continue producing frames.

To fulfill this responsibility, a TouchGFX AL typically uses the engine hook Rendering done and the interrupt Display Ready, as outlined in the previous section. The OSAL defines a function OSWrappers::signalVSync in which developers can signal the semaphore that the engine waits upon when it calls OSWrappers::waitForVSync.


Rendering Done

The Rendering done hook, OSWrappers::waitForVSync, is called by the TouchGFX Engine after rendering is complete.

When implementing this AL method, the AL must block the graphics engine until it is time to render the next frame. The standard method to implement this block is to perform a blocking read from a message queue. The HAL developer is free to use any method to implement the block if this is not feasible.

Tip

When OSWrappers::signalVSync is signaled (or the semaphore/queue used in OSWrappers::waitForVSync is signaled) TouchGFX will start rendering the next application frame. The following code based on CMSIS V2 causes the TouchGFX engine to block until an element is added to the queue by another part of the system, typically an interrupt synchronized with the display.

RTOS_OSWrappers.cpp

static osMessageQueueId_t vsync_queue = 0; // CMSIS V2 queue handle; the identifier is assigned elsewhere

void OSWrappers::waitForVSync()

{

uint32_t dummyGet;

// First make sure the queue is empty, by trying to remove an element with 0 timeout.

osMessageQueueGet(vsync_queue, &dummyGet, 0, 0);

// Then, wait for next VSYNC to occur.

osMessageQueueGet(vsync_queue, &dummyGet, 0, osWaitForever);

}

If not using an RTOS, the TouchGFX Generator provides the following implementation for waitForVSync using a volatile variable.

NO_OS_OSWrappers.cpp

static volatile uint8_t vsync_sem = 0;

void OSWrappers::waitForVSync()

{

while(!vsync_sem)

{

// Perform other work while waiting

...

}

}


Display ready

The Display ready signal to unblock the main loop should come from an interrupt from a display controller, from the display itself or even from a hardware timer. The source of the signal is dependent on the type of display.

The OSWrappers class defines a function for this signal: OSWrappers::signalVSync. The implementation of the function must unblock the main loop by satisfying the wait condition used in OSWrappers::waitForVSync.

Continuing from the above CMSIS RTOS example, the following code puts a message into the message queue vsync_queue which unblocks the TouchGFX Engine.

RTOS_OSWrappers.cpp

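static uint32_t dummy = 0x5a; // Message content is irrelevant; only its arrival matters

void OSWrappers::signalVSync()

{

if (vsync_queue)

{

// CMSIS V2 call, matching osMessageQueueGet in waitForVSync; zero timeout so it is usable from an interrupt

osMessageQueuePut(vsync_queue, &dummy, 0, 0);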

}

}

This OSWrappers::signalVSync method must be called at hardware level from an interrupt, e.g. from an LTDC, an external signal from the display, or a hardware timer.

If not using an RTOS, use a variable and assign a non-zero value to it to break the while-loop.

NO_OS_OSWrappers.cpp

void OSWrappers::signalVSync()

{

vsync_sem = 1;

}
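The two NO_OS functions above form a simple flag handshake. The following host-side sketch models that handshake in plain C++ so it can be tried without hardware. It assumes that the flag is cleared when the wait returns, so that the next call blocks until the next VSYNC; the snippets above do not show where the flag is cleared. The namespace `no_os_sketch`, the `idleWork` callback, and the returned pass count are hypothetical and not part of TouchGFX.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>

// Hypothetical stand-in for the NO_OS OSWrappers functions; not TouchGFX code.
namespace no_os_sketch
{
// Set from the display interrupt, polled by the main loop.
static volatile uint8_t vsync_sem = 0;

// Would be called from the display-ready interrupt handler.
void signalVSync()
{
    vsync_sem = 1;
}

// Spins until the flag is set, running idleWork on every pass.
// Returns the number of passes, which is only used for illustration.
uint32_t waitForVSync(const std::function<void()>& idleWork)
{
    uint32_t passes = 0;
    while (!vsync_sem)
    {
        idleWork();
        ++passes;
    }
    // Assumption: clear the flag so the next call blocks until the next VSYNC.
    vsync_sem = 0;
    return passes;
}
} // namespace no_os_sketch
```

On a host machine, the interrupt can be imitated by calling `no_os_sketch::signalVSync()` from inside the `idleWork` callback after a few passes; on a target, the call would come from the display interrupt instead.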

Report touch and physical button events

Before rendering a new frame, the TouchGFX Engine collects external input from the TouchController and ButtonController interfaces.

Touch Coordinates

Coordinates from the touch controller are translated into click-, drag- and gesture events by the engine and passed to the application. The following code is generated by the TouchGFX Generator:

TouchGFXConfiguration.cpp

static STM32TouchController tc;

static STM32L4DMA dma;

static LCD24bpp display;

static ApplicationFontProvider fontProvider;

static Texts texts;

static TouchGFXHAL hal(dma, display, tc, 390, 390);

During the TouchGFX Engine render cycle, when collecting input, the engine calls the sampleTouch() function on the tc object:

bool STM32TouchController::sampleTouch(int32_t& x, int32_t& y)

The implementation, provided by the AL developer, should assign the read touch coordinate values to x and y and return whether or not a touch was detected (true or false).

Tip

There are multiple ways of implementing this function:

- Polling in sampleTouch(): Read touch status from the hardware touch controller (typically I2C) by sending a request and polling for the result. This impacts the overall render time of the application as the I2C round-trip is often up to 1 ms during which the graphics engine is blocked.

- Interrupt based: Another possibility is to use interrupts. The I2C read command is started regularly by a timer or as a response to an external interrupt from the touch hardware. When the I2C data is available (another interrupt) the data is made available to the STM32TouchController through a message queue or global variables. The code below from STM32TouchController.cpp (created by TouchGFX Generator) shows how sampleTouch could look for a system with an RTOS:

STM32TouchController.cpp

bool STM32TouchController::sampleTouch(int32_t& x, int32_t& y)

{

if (osMessageQueueGet(mid_MsgQueue, &msg, NULL, 0U) == osOK)

{

x = msg.x;

y = msg.y;

return true;

}

return false;

}

The location of this file will be outlined in the next chapter on TouchGFX Generator.
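For a system without an RTOS, the interrupt-based approach can hand the coordinates over through global state instead of a message queue. The sketch below is one possible shape for that hand-over, not generated code: `TouchSample` and `TouchMailbox` are hypothetical names, and the interrupt protection a real target needs around the shared data is deliberately left out.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical single-slot mailbox between a touch interrupt and sampleTouch().
// On a real target, post() and sampleTouch() must be made interrupt-safe,
// e.g. by briefly masking the touch interrupt; that is omitted here.
struct TouchSample
{
    int32_t x;
    int32_t y;
};

class TouchMailbox
{
public:
    // Would be called when the I2C read has completed.
    void post(int32_t x, int32_t y)
    {
        sample.x = x;
        sample.y = y;
        pending = true;
    }

    // Same shape as sampleTouch() above: fills x and y and returns true
    // only if a new sample was waiting; otherwise x and y are left untouched.
    bool sampleTouch(int32_t& x, int32_t& y)
    {
        if (!pending)
        {
            return false;
        }
        x = sample.x;
        y = sample.y;
        pending = false;
        return true;
    }

private:
    TouchSample sample = {0, 0};
    bool pending = false;
};
```

Like the queue-based version, each sample is consumed once, so the interrupt side would have to keep posting samples for as long as the finger stays on the display.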

Other External Events

The Button Controller interface, touchgfx::ButtonController, can be used to map hardware signals (buttons or other) to events for the application. The reaction to these events can be configured within TouchGFX Designer.

The use of this interface is similar to the Touch Controller above, except that it is not mandatory to have a ButtonController. To use it, create an instance of a class implementing the ButtonController interface, and pass a reference to the instance to the HAL:

MyButtonController.cpp

class MyButtonController : public touchgfx::ButtonController

{

bool sample(uint8_t& key)

{

... //Sample IO, set key, return true/false

}

};

TouchGFXConfiguration.cpp

static MyButtonController bc;

void touchgfx_init()

{

...

hal.initialize();

hal.setButtonController(&bc);

}

The sample method in your ButtonController class is called before each frame. If it returns true, the key value is passed to the handleKeyEvent event handler of the current screen.
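Because sample is called before every frame, a button that is held down would produce a key event in every frame unless the implementation filters it. The sketch below shows one way to report a key only once per press. It uses a stand-in interface, `ButtonControllerLike`, so that it compiles without the TouchGFX headers; that interface, `EdgeButtonController`, the pin-reading function, and the key numbering are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in for the ButtonController interface described above, so that the
// sketch compiles without the TouchGFX headers.
class ButtonControllerLike
{
public:
    virtual ~ButtonControllerLike() {}
    virtual bool sample(uint8_t& key) = 0;
};

// Hypothetical controller that reports a key once per press (rising edge).
class EdgeButtonController : public ButtonControllerLike
{
public:
    // reader is a hypothetical function returning one bit per button.
    explicit EdgeButtonController(uint8_t (*reader)()) : readPins(reader), previous(0) {}

    bool sample(uint8_t& key) override
    {
        const uint8_t current = readPins();
        // Bits that are set now but were clear at the previous sample.
        const uint8_t pressed = static_cast<uint8_t>(current & ~previous);
        previous = current;
        for (uint8_t bit = 0; bit < 8; ++bit)
        {
            if (pressed & (1u << bit))
            {
                // Key values 1..8 are chosen arbitrarily for this sketch.
                key = static_cast<uint8_t>(bit + 1);
                return true;
            }
        }
        return false;
    }

private:
    uint8_t (*readPins)();
    uint8_t previous;
};
```

If several buttons are pressed in the same frame, this sketch reports only the lowest-numbered one and drops the others; whether that is acceptable depends on the application.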


Synchronize framebuffer access

Multiple actors may be interested in accessing the framebuffer memory.

| # | Actor | Framebuffer access |
|---|---|---|
| 1 | CPU | Reads and writes pixels during rendering |
| 2 | DMA2D* | Reads and writes pixels during hardware assisted rendering |
| 3 | LTDC | Reads pixels during transfer to parallel RGB display |
| 4 | DMA | Reads pixels during transfer to SPI display |

The TouchGFX Engine synchronizes framebuffer access through the OSWrappers interface and peripherals (e.g. DMA2D) that also wish to access the framebuffer must do the same. The normal design is to use a semaphore to guard the access to the framebuffer, but other synchronization mechanisms can be used.

The following table lists the functions in the OSWrappers class (OSWrappers.cpp) that can be generated by the TouchGFX Generator or implemented manually by the user.

| Method | Description |
|---|---|
| takeFrameBufferSemaphore | Called by graphics engine to get exclusive access to the framebuffer. This will block the engine until the DMA2D is done (if running) |
| tryTakeFrameBufferSemaphore | Ensure that the lock is taken. This method does not block, but ensures that the next call to takeFrameBufferSemaphore will block its caller |
| giveFrameBufferSemaphore | Releases the framebuffer lock |
| giveFrameBufferSemaphoreFromISR | Releases the framebuffer lock from an interrupt context |
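The difference between taking and try-taking the lock is easy to miss in the table, so the following sketch models the four operations with a single atomic flag. It is a busy-wait stand-in for illustration only; `FrameBufferLock` and its method names are hypothetical, and an RTOS port would typically use a binary semaphore instead of spinning.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical busy-wait stand-in for the framebuffer lock; not TouchGFX code.
class FrameBufferLock
{
public:
    // Blocks (here: spins) until the lock is free, then takes it.
    void take()
    {
        while (taken.exchange(true, std::memory_order_acquire))
        {
            // Another actor, e.g. the DMA2D, still owns the framebuffer.
        }
    }

    // Never blocks. Afterwards the lock is taken, regardless of who took it,
    // so a following take() waits until give() has been called.
    void tryTake()
    {
        taken.exchange(true, std::memory_order_acquire);
    }

    // Releases the lock. In this sketch the same code would also serve as
    // the interrupt-context variant.
    void give()
    {
        taken.store(false, std::memory_order_release);
    }

    bool isTaken() const
    {
        return taken.load(std::memory_order_acquire);
    }

private:
    std::atomic<bool> taken{false};
};
```

A typical sequence under these assumptions: the engine calls `take()` before drawing, a hardware operation is started while the lock is held, and the completion interrupt calls `give()` so that the next `take()` can proceed.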


Report the next available framebuffer area

Regardless of rendering strategy, the TouchGFX Engine must know, in each tick, which memory area it should render pixels to. With single- or double-framebuffer strategies, the TouchGFX Engine writes pixel data to a memory area according to the full width, height, and bit depth of the framebuffer. The graphics engine takes care of swapping between the two buffers in a double buffer setup.

It is possible to limit access to part of the framebuffer. The method HAL::getTFTCurrentLine() can be reimplemented in your HAL subclass. Return the line number above which it is safe for the graphics engine to draw.

Using a Partial Framebuffer strategy the developer defines one or more blocks of memory that TouchGFX Engine will use when rendering. Read more about that here.


Perform Render Operations

Rendering and displaying graphics are rarely the sole purposes of an application. Other tasks also need to use the CPU. One goal of TouchGFX is to draw the user interface using as few CPU cycles as possible. The HAL class abstracts the DMA2D found on many STM32 microcontrollers (or other hardware capabilities) and makes this available to the graphics engine.

When rendering assets such as bitmaps to the framebuffer, the TouchGFX Engine checks if the HAL has the capability to 'blit' a portion or all of the bitmap into the framebuffer. If so, the drawing operation is delegated to the HAL rather than being handled by the CPU.

The engine calls the method HAL::getBlitCaps() to get a description of the capabilities of the hardware. Your HAL subclass can reimplement this to add the capabilities.

When the engine is drawing the user interface it will call operations on the HAL class, e.g. HAL::blitCopy, that queue the operations for the DMA. If the HAL does not report the required capability, the graphics engine will use a software rendering fallback.
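The capability check described above amounts to testing a bit in a mask. The sketch below illustrates that decision with hypothetical names: the `BlitOp` values, `RenderPath`, and `choosePath` are invented for this example and do not reproduce the actual TouchGFX enumerations or engine code.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical capability bits; the real values are defined by TouchGFX.
enum BlitOp : uint32_t
{
    OP_COPY       = 1u << 0,
    OP_FILL       = 1u << 1,
    OP_COPY_ALPHA = 1u << 2
};

enum class RenderPath
{
    Hardware, // Operation is queued for the DMA
    Software  // Operation is drawn by the CPU
};

// Delegates to hardware only if the reported capability mask contains op.
RenderPath choosePath(uint32_t reportedCaps, BlitOp op)
{
    return (reportedCaps & op) != 0 ? RenderPath::Hardware : RenderPath::Software;
}
```

With a mask of `OP_COPY | OP_FILL`, a copy or fill would take the hardware path in this model, while an alpha-blended copy would fall back to software.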

Tip

For many MCUs, TouchGFX Generator can generate a ChromART driver which adds the capability of several 'blit' operations using the ChromART chip.

Handle framebuffer transfer to display

In order to transfer the framebuffer to the display the hook "Rendering of area complete" is often utilized in a TouchGFX AL. The engine signals the AL once rendering of a part of the framebuffer has been completed. The AL can choose how to transfer this part of the framebuffer to the display.

Rendering of area complete

In code, this hook is the virtual function HAL::flushFrameBuffer(const Rect& rect).

On STM32 microcontrollers with LTDC controllers we don't need to do anything to transmit the framebuffer after every rendering. This happens continuously with a given frequency after the LTDC has been initialized and therefore we can leave the implementation of this method empty.

For other display types like SPI or 8080 you need to transfer the framebuffer manually.

The implementation of this function allows developers to initiate a manual transfer of that area of the framebuffer to a display with GRAM:

void TouchGFXHAL::flushFrameBuffer(const touchgfx::Rect& r)

{

HAL::flushFrameBuffer(r); // Call superclass with the rectangle passed to this function

//start transfer if not running already!

if (!IsTransmittingData())

{

const uint8_t* pixels = ...; // Calculate pixel address

SendFrameBufferRect((uint8_t*)pixels, r.x, r.y, r.width, r.height);

}

else

{

... // Queue rect for later or wait here

}

}
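The address calculation is left open in the listing above because it depends on the framebuffer layout. As a rough illustration, the sketch below computes the start address of a rectangle under the assumption of a linear, row-major framebuffer with no padding between rows. The helper names `pixelOffset` and `rectStart` and their parameters are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Byte offset of pixel (x, y) in a linear, row-major framebuffer.
// Assumes no padding between rows; bytesPerPixel is e.g. 2 for RGB565
// and 3 for RGB888.
std::size_t pixelOffset(uint16_t x, uint16_t y, uint16_t fbWidth, uint8_t bytesPerPixel)
{
    return (static_cast<std::size_t>(y) * fbWidth + x) * bytesPerPixel;
}

// Address of the top-left pixel of a rectangle that starts at (x, y).
const uint8_t* rectStart(const uint8_t* framebuffer, uint16_t x, uint16_t y,
                         uint16_t fbWidth, uint8_t bytesPerPixel)
{
    return framebuffer + pixelOffset(x, y, fbWidth, bytesPerPixel);
}
```

Unless the rectangle spans the full framebuffer width, its rows are not contiguous in memory: each row starts `fbWidth * bytesPerPixel` bytes after the previous one, so the transfer routine has to send the rectangle row by row or use a DMA mode that supports a line offset.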